Our species has begun to scrute the inscrutable shoggoth! With Matt Freeman :)

LINKS

Anthropic's latest AI safety research paper on interpretability

Anthropic is hiring

Episode 93 of The Mind Killer

Talkin' Fallout

VibeCamp

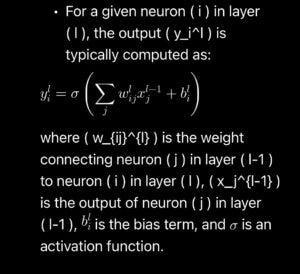

0:00:17 – A Layman's AI Refresher

0:21:06 – Aligned By Default

0:50:56 – Highlights from Anthropic's Latest Interpretability Paper

1:26:47 – Guild of the Rose Update

1:29:40 – Going to VibeCamp

1:37:05 – Feedback

1:43:58 – Less Wrong Posts

1:57:30 – Thank the Patron

Our Patreon, or if you prefer, our Substack

Hey look, we have a Discord! What could possibly go wrong?

We now partner with The Guild of the Rose, check them out.

Rationality: From AI to Zombies, The Podcast

LessWrong Sequence Posts Discussed in this Episode:

If You Demand Magic, Magic Won’t Help

Next Sequence Posts:

213 - Are Transformer Models Aligned By Default?